AI agents are now used across many industries because they can streamline operations independently and intelligently. Are you wondering how to make the most of AI agents to grow your business? Here, you will learn the most effective practices for implementing AI agents and the ethical considerations to keep in mind when deploying them. Keep reading.

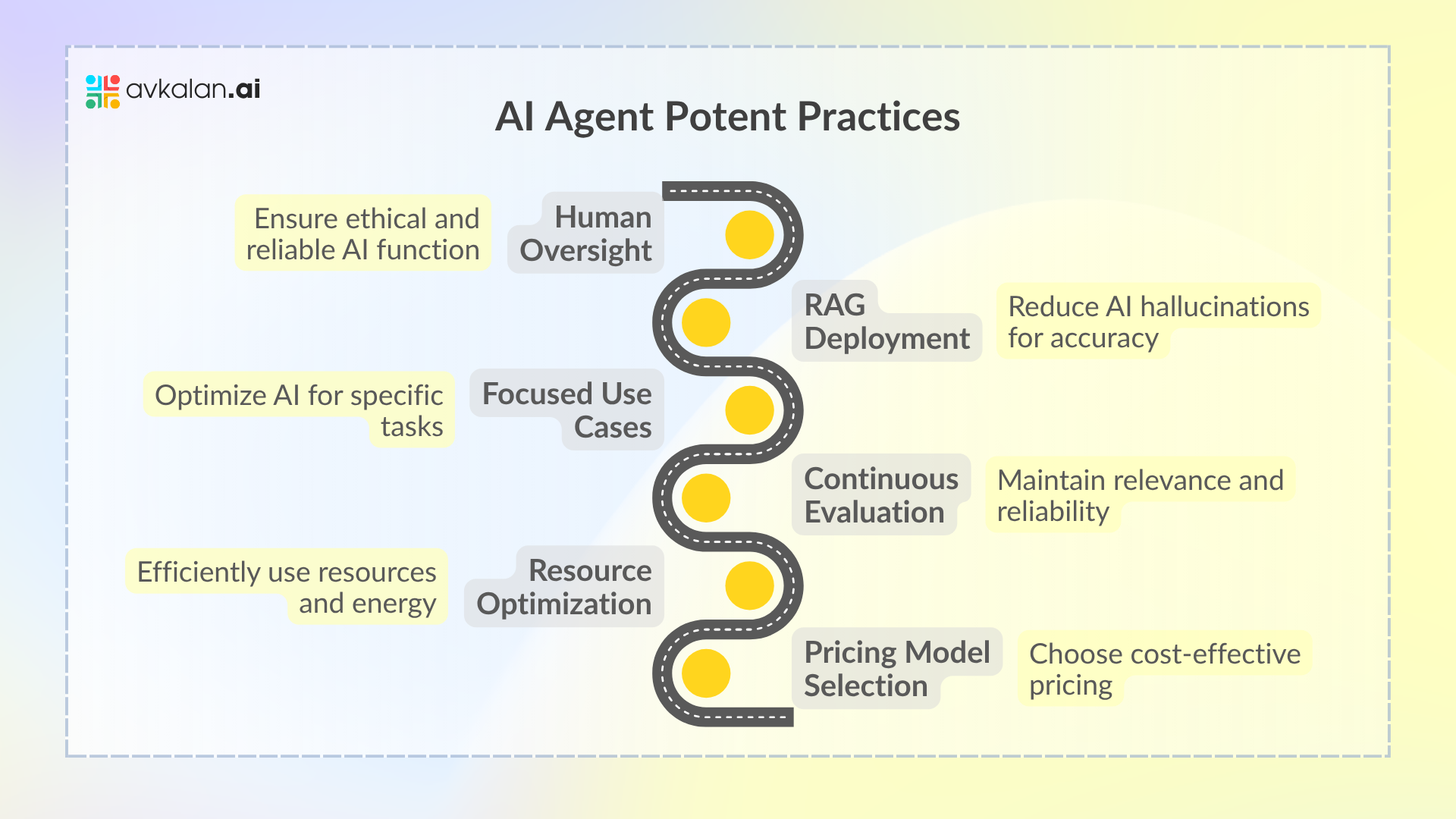

AI Agent Best Practices

Below are the most effective strategies to successfully implement AI agents:

- Use Human Oversight to Sustain Control: AI can produce incorrect output because of hallucinations. AI agents may also experience downtime, make unexpected decisions, show bias, and share potentially harmful information, all of which should be monitored. Human oversight ensures AI systems operate ethically and within defined limits. By keeping control over AI processes, you can catch errors, mitigate risks, and build trust in your AI implementation. A good implementation strikes the right balance between human involvement and automation. You can also adopt Human-in-the-loop (HITL) or Human-on-the-loop (HOTL) setups for effective supervision. In HITL systems, a human reviews or approves the agent’s decisions at every critical decision point. In HOTL systems, the agent acts on its own while a human monitors it and steps in when needed. Combining human judgment with AI agent operations makes systems more reliable and trustworthy, and this hybrid model improves outcomes while preserving control and accountability.

- Deploy Retrieval Augmented Generation (RAG) to Reduce Hallucinations: Because of hallucinations, an AI agent can give a wrong answer that looks perfectly plausible to a human reader and present it as correct. Since AI agents act independently and make quick decisions, such errors can erode trust, spread misinformation, and undermine decision-making. You should therefore reduce hallucinations as much as possible by deploying Retrieval Augmented Generation (RAG), a technique that grounds AI outputs in retrieved, up-to-date sources so they are more current and reliable. Many businesses use RAG to substantially reduce hallucinations in their AI agents and get more accurate results. For instance, Chatsonic, a well-known AI marketing agent, performs RAG over web content and user-uploaded documents to produce grounded responses. This saves you the hassle of building and maintaining a separate RAG pipeline yourself.

- Start With Focused Use Cases for Maximum Impact: Before implementing AI agents, decide which processes you want to use AI for. This helps you choose purpose-built AI agents rather than general-purpose ones. AI agents built for specific tasks tend to outperform their general-purpose counterparts, because they can be trained and optimized for particular scenarios and deliver the solutions those scenarios require. General-purpose AI agents, by contrast, need further training and may struggle to meet niche requirements. For instance, Chatsonic is built specifically for marketing tasks such as personalizing content, drafting ad copy, and planning social media strategy. A general-purpose chatbot like ChatGPT can produce similar outputs, but they tend to be more generic and lower in quality. A clear use case lets you increase the impact of your AI implementation while reducing its complexity.

- Evaluate Continuously for Reliability: You can’t afford to deploy an AI agent and forget about it. Continuous evaluation is crucial for accuracy, relevance, and reliability, especially in dynamic environments. AI agents deal with changing user needs and datasets, and without frequent assessment they can become inconsistent, outdated, or error-prone. Continuous evaluation has its own challenges, such as applying the same standards across every evaluation and handling large volumes of interactions and data. Many organizations combine LLM-based and human-based evaluation. In human-centric evaluation, you can use end-user feedback and crowdsourcing to cover large query volumes, but these processes still take considerable time and resources. Techniques like “LLM as a judge” can speed up output assessment: one LLM scores the AI agent’s outputs against consistent criteria, which scales far better than manual review.

- Use Resources Effectively to Save Costs: Because AI agents are more advanced, they require more compute and energy. When implementing them, keep track of resource and energy consumption with both your costs and your ethical commitments in mind. Many international organizations now treat AI resource optimization as a top priority. You can optimize the resource use of AI agents in several ways. One is adopting lean AI methods, which means building lightweight models that deliver strong performance with lower computational requirements; lean AI focuses on getting results efficiently by eliminating unnecessary complexity. Another effective strategy is fine-tuning a pre-trained model for your specific application instead of training a new model from scratch, which reduces the resources spent on training.

- Select the Right Pricing Model: Implementing AI agents can be a big investment once you account for implementation, maintenance, and usage costs. Choosing the right pricing model keeps the technology practical and saves money. You can usually choose between usage-based and subscription-based models. Usage-based models are highly flexible: companies pay only for the resources they consume, which benefits organizations with fluctuating or unpredictable demand. Subscription-based plans offer predictable monthly costs, which makes them ideal for businesses with steady workloads.
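To make the RAG practice above more concrete, here is a minimal sketch of the retrieval and prompt-assembly steps. It is a toy under stated assumptions: the documents are hypothetical, and simple word-overlap scoring stands in for the embedding model and vector index a production RAG system would normally use. `retrieve` and `build_prompt` are illustrative names, and the resulting prompt would be sent to whichever LLM your agent runs on.

```python
import re
from collections import Counter

# Hypothetical knowledge base; in practice, your own company documents.
DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and a dedicated manager.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most words with the query.

    Word overlap stands in for embedding similarity to keep the sketch self-contained.
    """
    query_counts = Counter(tokenize(query))

    def score(doc: str) -> int:
        # Counter & Counter keeps each shared word with its smaller count.
        return sum((query_counts & Counter(tokenize(doc))).values())

    ranked = sorted(documents, key=score, reverse=True)
    return [doc for doc in ranked[:k] if score(doc) > 0]


def build_prompt(query: str, documents: list[str]) -> str:
    """Ground the model by placing the retrieved passages before the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


print(build_prompt("What is the refund policy?", DOCUMENTS))
```

Instructing the model to answer only from the retrieved context, and to admit when that context lacks the answer, is the part that helps retrieval curb hallucinations. Swapping word overlap for embedding similarity changes the scoring, not the overall flow.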
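The “LLM as a judge” technique from the evaluation practice above can be sketched as follows. It assumes a hypothetical `call_llm` function that sends a prompt to whatever model you use as the judge and returns its text reply; a stub stands in for it here so the example runs offline. The rubric wording, the 1 to 5 scale, and the pass threshold of 4.0 are illustrative choices, not a standard.

```python
import re
from statistics import mean
from typing import Callable, Optional

JUDGE_TEMPLATE = (
    "You are grading an AI agent's answer.\n"
    "Question: {question}\nAnswer: {answer}\n"
    "Rate accuracy and relevance from 1 (poor) to 5 (excellent). "
    "Reply in the form 'SCORE: <n>'."
)


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-5 score from the judge's reply, or None if it is malformed."""
    match = re.search(r"SCORE:\s*([1-5])", reply)
    return int(match.group(1)) if match else None


def judge_batch(
    samples: list[tuple[str, str]],
    call_llm: Callable[[str], str],
    pass_threshold: float = 4.0,
) -> dict:
    """Score each (question, answer) pair with the judge model and summarize."""
    scores = []
    unparsed = 0
    for question, answer in samples:
        reply = call_llm(JUDGE_TEMPLATE.format(question=question, answer=answer))
        score = parse_score(reply)
        if score is None:
            unparsed += 1  # leave for human review instead of guessing a score
        else:
            scores.append(score)
    average = mean(scores) if scores else 0.0
    return {"average": average, "passed": average >= pass_threshold, "unparsed": unparsed}


# Hypothetical stub judge so the sketch runs offline; use a real model call in practice.
def fake_judge(prompt: str) -> str:
    return "SCORE: 5" if "30 days" in prompt else "SCORE: 2"


samples = [
    ("What is the refund window?", "Returns are accepted within 30 days."),
    ("What is the refund window?", "We never accept returns."),
]
print(judge_batch(samples, fake_judge))
```

Replies the judge model fails to format correctly are counted rather than guessed, so they can go to a human reviewer. This keeps the automated judge paired with the human-based evaluation the practice recommends.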
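For the pricing decision above, a quick break-even estimate helps. A usage-based bill grows linearly with volume while a subscription is flat, so the subscription becomes the cheaper option once monthly volume reaches the flat fee divided by the per-request price. The sketch below uses hypothetical rates ($0.02 per request versus a $500 monthly subscription); substitute your vendor’s actual prices, and note that real plans often add tiers or caps that this ignores.

```python
import math
from decimal import Decimal


def usage_cost(requests: int, price_per_request: Decimal) -> Decimal:
    """Monthly bill under a usage-based plan: pay only for what you consume."""
    return requests * price_per_request


def break_even_requests(monthly_fee: Decimal, price_per_request: Decimal) -> int:
    """Smallest monthly volume at which the flat subscription costs no more than usage billing."""
    return math.ceil(monthly_fee / price_per_request)


def cheaper_plan(requests: int, monthly_fee: Decimal, price_per_request: Decimal) -> str:
    """Pick the cheaper plan for a given volume (ties go to the predictable subscription)."""
    if monthly_fee <= usage_cost(requests, price_per_request):
        return "subscription"
    return "usage-based"


# Hypothetical rates; Decimal avoids floating-point rounding in money math.
PRICE_PER_REQUEST = Decimal("0.02")
MONTHLY_FEE = Decimal("500")

print(break_even_requests(MONTHLY_FEE, PRICE_PER_REQUEST))  # 25000
for volume in (10_000, 25_000, 40_000):
    print(volume, cheaper_plan(volume, MONTHLY_FEE, PRICE_PER_REQUEST))
```

With these assumed rates, both plans cost $500 at 25,000 requests per month; below that volume the usage-based plan is cheaper, and above it the subscription is.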

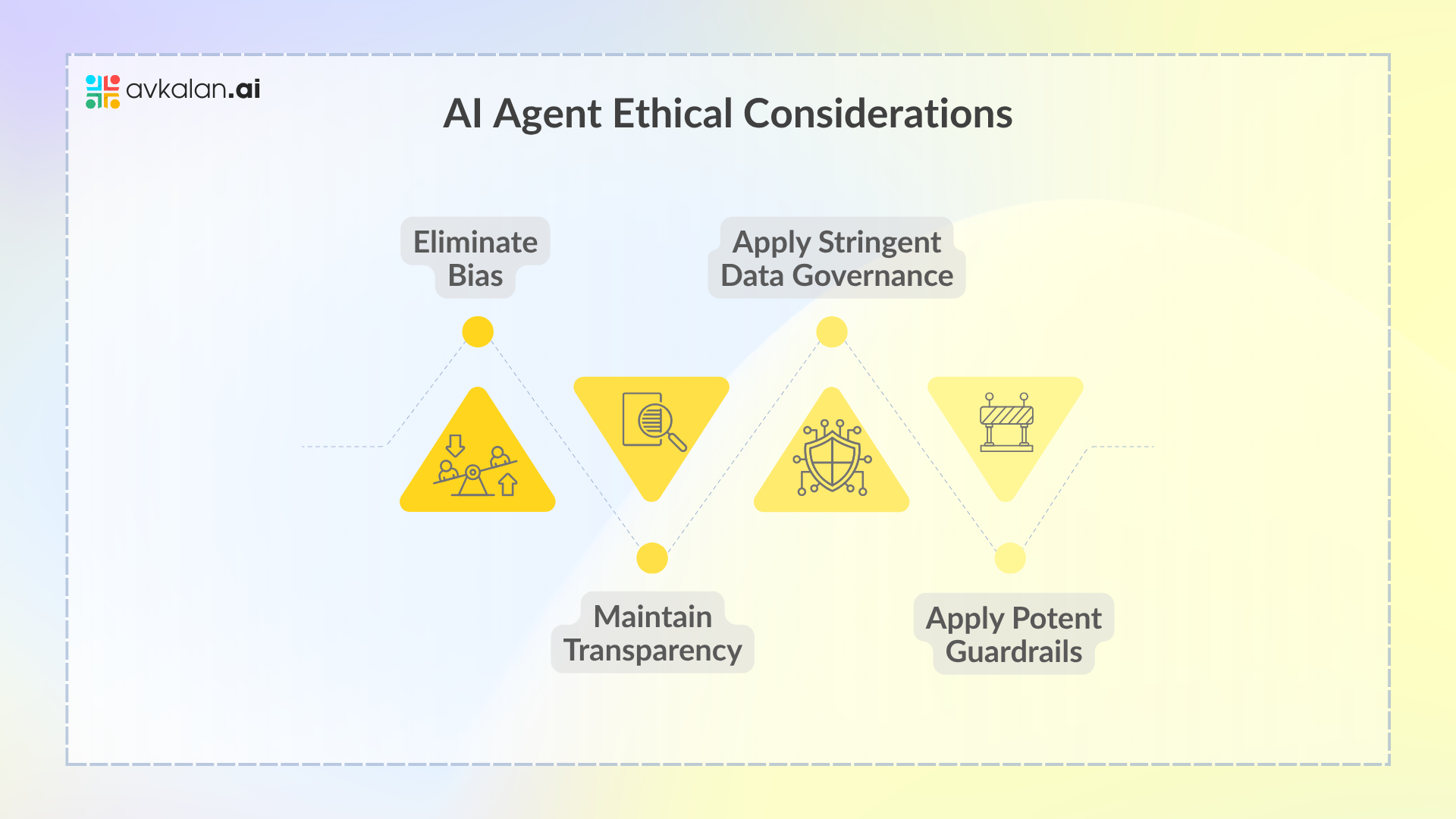

AI Agent Ethical Considerations

Besides following the best practices discussed above, you should keep these ethical considerations in mind when deploying AI agents:

- Minimize Bias Through Thorough Testing: AI agents should operate in a healthy environment free of ideological slant, hate speech, and discrimination. Even though they have no emotions, they are still susceptible to bias. AI models are trained on large datasets that can contain biases, and those biases can become encoded in AI systems and produce outputs that reflect stereotypes. For example, an AI recruitment tool trained on historical hiring data may favor certain demographics over others because of patterns in that data. Many businesses are building tools that train AI agents and scan their outputs to remove such biases. Make sure the datasets used to train AI agents represent diverse cultures, demographics, and environments. Run regular evaluations to find and fix biased outcomes. Apply techniques like adversarial debiasing, which trains a model alongside an adversary that tries to predict a protected attribute from the model’s outputs, pushing the model to learn representations that carry less of that bias. Build AI systems that can clearly explain how they reach decisions; this transparency helps users hold the system accountable and spot possible biases.

- Maintain Transparency: Transparency is essential when implementing AI systems because it builds trust. Stakeholders, employees, and customers should know how and where AI is used across your company.

- Apply Stringent Data Governance: How AI systems use data, and who holds the rights to it, raises both legal and ethical concerns, which makes strict data governance unavoidable. When deploying AI agents into your systems, review your data sources, protect your data, and increase transparency. Regularly checking and documenting the origin of the datasets used to train AI agents helps ensure they meet legal and ethical obligations. Use encryption and access controls to protect sensitive data against breaches. Tell users how their data is used, whether for generating insights or for training AI. Work with policymakers and industry leaders to create AI governance frameworks.

- Apply Robust Guardrails: Guardrails are a set of rules that keep an AI agent system operating within defined boundaries, including legal requirements, ethical practices, and internal guidelines. Think of them as limits that AI agents should never cross. Because AI agents make decisions and execute actions without human input, they are exposed to risks such as breaching ethical standards and producing harmful outcomes. Guardrails mitigate these risks and protect both your organization and your users, letting you get the most out of AI agents while reducing the chance of undesired outcomes. To apply guardrails, set limits that keep the AI from going beyond its intended actions. Enforce a set of ethical rules, such as non-discrimination and transparency, to govern AI actions. Consider using keyword filtering and pre-trained models to block unwanted outputs. Implement fallback options, such as escalating decisions to human managers in uncertain situations.
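The “consistent evaluations” step in the bias practice above can start with something as simple as comparing outcome rates across groups. Below is a minimal sketch for the hiring example, assuming you have logged each candidate’s demographic group and whether the agent shortlisted them; the group labels and data are hypothetical. It applies the four-fifths heuristic from US employment-selection guidance, flagging any group whose selection rate falls below 80% of the highest group’s rate.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of candidates in each group that the model selected."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def flag_disparate_impact(
    decisions: list[tuple[str, bool]], threshold: float = 0.8
) -> dict[str, float]:
    """Return groups whose selection rate is below `threshold` times the top group's rate.

    The 0.8 default mirrors the four-fifths heuristic; it is a screening signal, not proof of bias.
    """
    rates = selection_rates(decisions)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # nobody was selected, so there is no rate to compare against
    return {
        group: round(rate / best, 2)
        for group, rate in rates.items()
        if rate / best < threshold
    }


# Hypothetical audit log: (demographic group, was the candidate shortlisted?)
decisions = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
print(selection_rates(decisions))
print(flag_disparate_impact(decisions))
```

Here group_b is shortlisted at 0.3 versus 0.5 for group_a, a ratio of 0.6, so it is flagged for investigation. Passing this check does not show a system is unbiased; it only catches one obvious kind of skew.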
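The guardrail steps above (limiting the agent to its intended actions, keyword filtering, and a human fallback) can be combined into one check that runs before an agent’s proposed action is executed. This is a minimal sketch: the allowed actions, blocked terms, and 0.7 confidence floor are hypothetical policy values, it assumes the agent reports a confidence score with each proposal, and in practice a pre-trained moderation model would usually replace or supplement the simple keyword list.

```python
from dataclasses import dataclass

# Hypothetical policy values; define your own per use case.
ALLOWED_ACTIONS = {"answer_question", "create_ticket", "send_faq_link"}
BLOCKED_TERMS = {"password", "social security number"}
CONFIDENCE_FLOOR = 0.7


@dataclass
class AgentProposal:
    action: str
    message: str
    confidence: float  # assumed self-reported confidence, 0.0 to 1.0


def apply_guardrails(proposal: AgentProposal) -> str:
    """Decide whether a proposed action runs, is blocked, or goes to a human."""
    # 1. Scope limit: the agent may only take actions it was designed for.
    if proposal.action not in ALLOWED_ACTIONS:
        return "blocked: action outside the agent's intended scope"
    # 2. Keyword filter: stop outputs that mention restricted content.
    lowered = proposal.message.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "blocked: message contains restricted content"
    # 3. Fallback: uncertain decisions are escalated to a human manager.
    if proposal.confidence < CONFIDENCE_FLOOR:
        return "escalated: routed to a human manager for review"
    return "approved"


print(apply_guardrails(AgentProposal("create_ticket", "Ticket opened for your refund.", 0.92)))
print(apply_guardrails(AgentProposal("issue_refund", "Refund sent.", 0.99)))
print(apply_guardrails(AgentProposal("answer_question", "Your password is 1234.", 0.95)))
print(apply_guardrails(AgentProposal("answer_question", "I think the fee is $10.", 0.4)))
```

Checking scope first means an out-of-bounds action is rejected even when the agent is highly confident. Routing low-confidence proposals to a person is also a simple form of the Human-in-the-loop oversight described earlier.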

The Bottom Line

As more companies use AI agents in their operations, following the most effective practices and ethical considerations becomes crucial. Hopefully, this post has covered them in enough detail. Selecting the right AI partner can help you define your company’s AI policies and achieve consistent success. Beyond following best practices and ethics, you should also choose reliable AI agents that protect your data and minimize complications in operations.